Secure RAG Deployment

Martin Harrod - 01 Sep, 2024

Overview

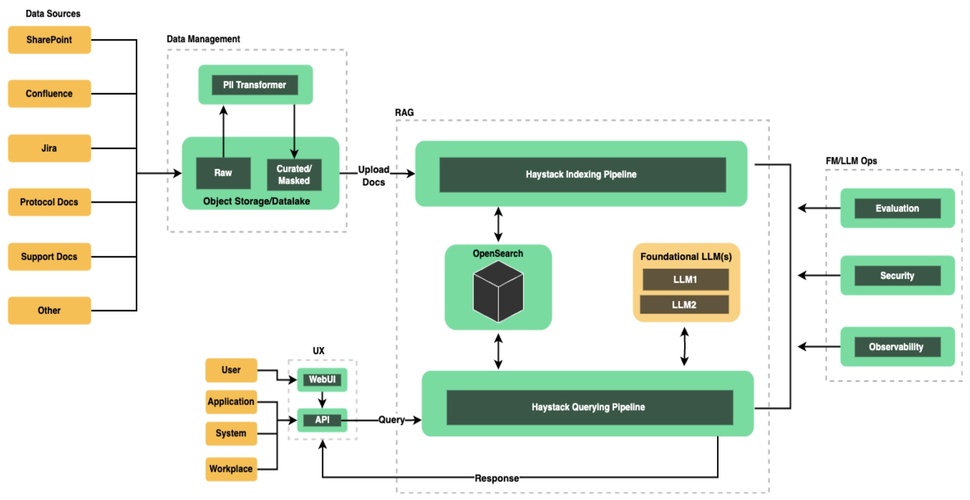

Designed and prototyped retrieval-augmented generation (RAG) pipelines in Haystack 2 to support secure, context-aware LLM applications. Built custom evaluation workflows with DeepEval to measure response quality and retrieval accuracy, ran the LLaMA 3.1 8B model locally through Ollama for inference, and used Presidio to detect and anonymize PII in retrieved documents. Developed the system in FastAPI, using dependency injection for metadata handling, packaged it with Poetry, and tracked experiments in MLflow. Served as lead security engineer, responsible for architecture, experimentation, and developer enablement.
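The retrieve, anonymize, and prompt-assembly flow described above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not the project's code: the keyword-overlap `retrieve` stands in for a Haystack 2 retriever, the two regex patterns in `PII_PATTERNS` stand in for Presidio (which combines NER models with pattern recognizers), and the final call to LLaMA 3.1 8B through Ollama is omitted. `Document`, `tokenize`, `retrieve`, `anonymize`, and `build_prompt` are hypothetical names.

```python
import re
from dataclasses import dataclass, field

# Hypothetical regex recognizers standing in for Presidio; real Presidio
# combines NER models with pattern-based recognizers and covers many more entities.
PII_PATTERNS = {
    "EMAIL_ADDRESS": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE_NUMBER": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


@dataclass
class Document:
    """Simplified stand-in for a Haystack 2 Document: text plus metadata."""
    content: str
    meta: dict = field(default_factory=dict)


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: list[Document], top_k: int = 2) -> list[Document]:
    """Toy keyword-overlap retriever; ties keep the original document order."""
    terms = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(terms & tokenize(d.content)), reverse=True)
    return ranked[:top_k]


def anonymize(text: str) -> str:
    """Replace every PII match with an entity-type placeholder, e.g. <EMAIL_ADDRESS>."""
    for entity, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"<{entity}>", text)
    return text


def build_prompt(query: str, docs: list[Document]) -> str:
    """Assemble a grounded prompt from anonymized context, tagged with each source."""
    context = "\n".join(
        f"- [{d.meta.get('source', 'unknown')}] {anonymize(d.content)}" for d in docs
    )
    return (
        "Answer using only the context below and cite the source in brackets.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


docs = [
    Document("Contact alice@example.com or 555-123-4567 for VPN access.", {"source": "it-faq"}),
    Document("Quarterly revenue figures are published in the finance wiki.", {"source": "finance"}),
]
query = "How do I get VPN access?"
prompt = build_prompt(query, retrieve(query, docs, top_k=1))
print(prompt)  # this string would then be sent to the local model (not shown)
```

Masking after retrieval, as the overview describes, leaves the indexed documents unchanged while only placeholder text enters the prompt; tagging each context line with its `source` metadata is one simple way to keep answers traceable to the documents they came from.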

Impact

Delivered a secure, privacy-aware RAG framework that produced explainable, high-quality responses while protecting sensitive data. Accelerated development of enterprise-grade GenAI applications by providing reusable pipeline components, automated evaluation metrics, and built-in PII detection and anonymization.
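One way to make automated retrieval evaluation concrete is a small check over a labeled query set. The sketch below computes two standard ranking metrics, hit rate@k and mean reciprocal rank (MRR), with the standard library. It is a swapped-in illustration, not DeepEval, many of whose metrics use an LLM as judge; the query IDs, document IDs, function names, and the `min_mrr` threshold are all hypothetical.

```python
def hit_rate(results: dict[str, list[str]], relevant: dict[str, set[str]], k: int = 3) -> float:
    """Fraction of queries with at least one relevant document in the top k."""
    hits = sum(1 for q, ranked in results.items() if set(ranked[:k]) & relevant[q])
    return hits / len(results)


def mean_reciprocal_rank(results: dict[str, list[str]], relevant: dict[str, set[str]]) -> float:
    """Average of 1/rank of the first relevant document (0 if none is retrieved)."""
    total = 0.0
    for q, ranked in results.items():
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in relevant[q]:
                total += 1.0 / rank
                break
    return total / len(results)


def evaluate(results, relevant, k: int = 3, min_mrr: float = 0.5):
    """Return numeric metrics plus a pass/fail flag for a CI-style regression gate."""
    metrics = {
        f"hit_rate_at_{k}": hit_rate(results, relevant, k),
        "mrr": mean_reciprocal_rank(results, relevant),
    }
    return metrics, metrics["mrr"] >= min_mrr


# Hypothetical ranked retrieval output and ground-truth labels per query.
results = {"q1": ["d3", "d1", "d7"], "q2": ["d2", "d5", "d9"], "q3": ["d4", "d8", "d6"]}
relevant = {"q1": {"d1"}, "q2": {"d2"}, "q3": {"d0"}}
metrics, passed = evaluate(results, relevant)
print(metrics, passed)
```

Because `metrics` is a flat dict of floats, it could be logged per run with `mlflow.log_metrics(metrics)` so that retriever changes can be compared across experiments.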

Technologies, Frameworks, and Artifacts

- Haystack 2

- DeepEval

- Ollama (LLaMA 3.1 8B)

- Presidio PII masking

- FastAPI and Poetry

- MLflow experiment tracking